It’s not news anymore that LLMs and AI search are fundamentally reshaping how content is ranked and surfaced. As traditional SEO gives way to Answer Engine Optimization (AEO), understanding what drives visibility in AI-generated responses is critical for organic growth.

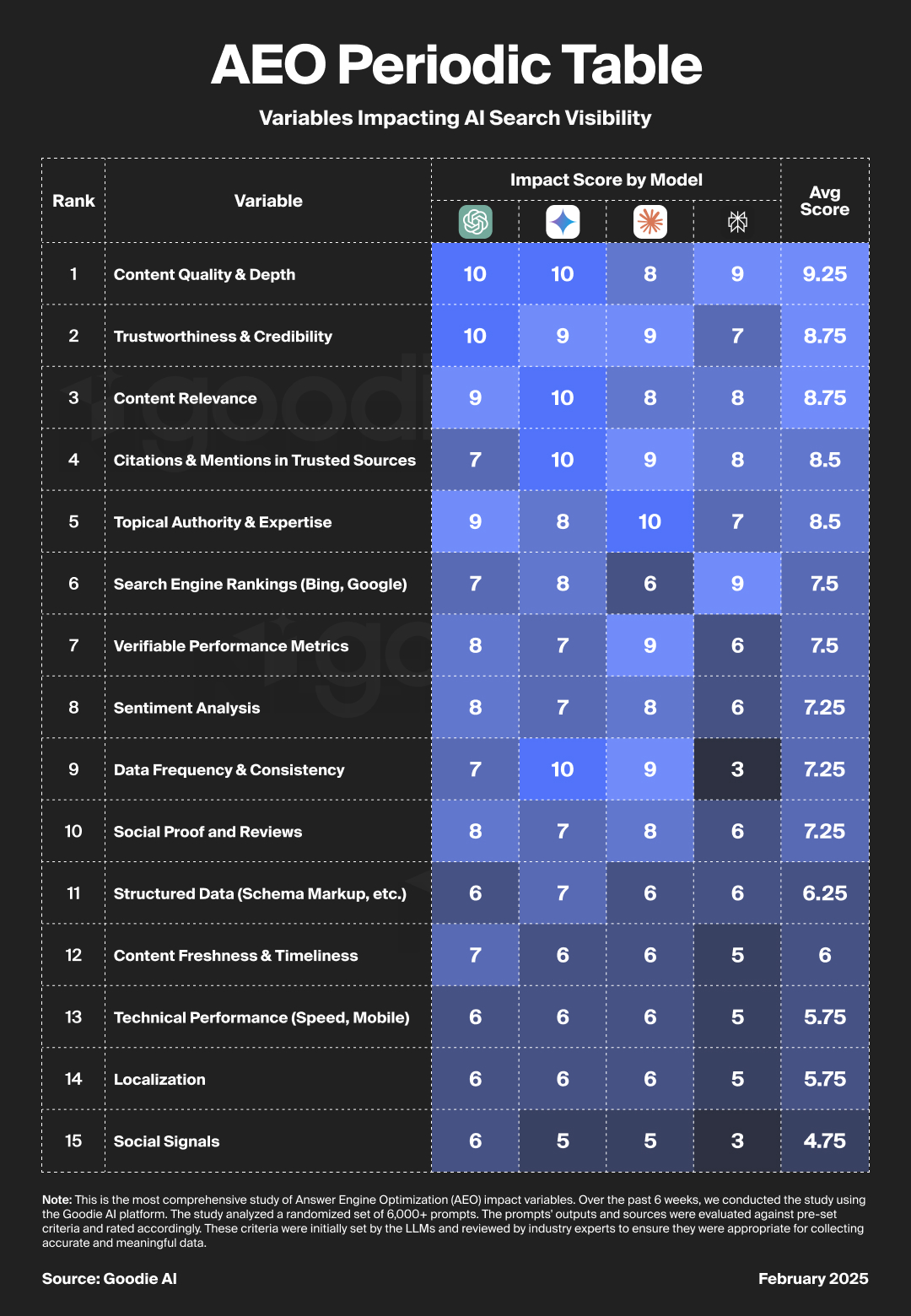

To quantify which factors influence AI search rankings, we conducted a six-week study using Goodie AI, analyzing 6,000+ randomized prompts across ChatGPT, Gemini, Claude, and Perplexity. The study evaluated AI-generated responses based on pre-set ranking criteria reviewed by industry experts to identify the most influential variables shaping AI search visibility.

Models covered in this study: ChatGPT, Gemini, Claude, and Perplexity.

The findings reveal 15 core AEO impact factors, ranked by their relative influence across AI models.

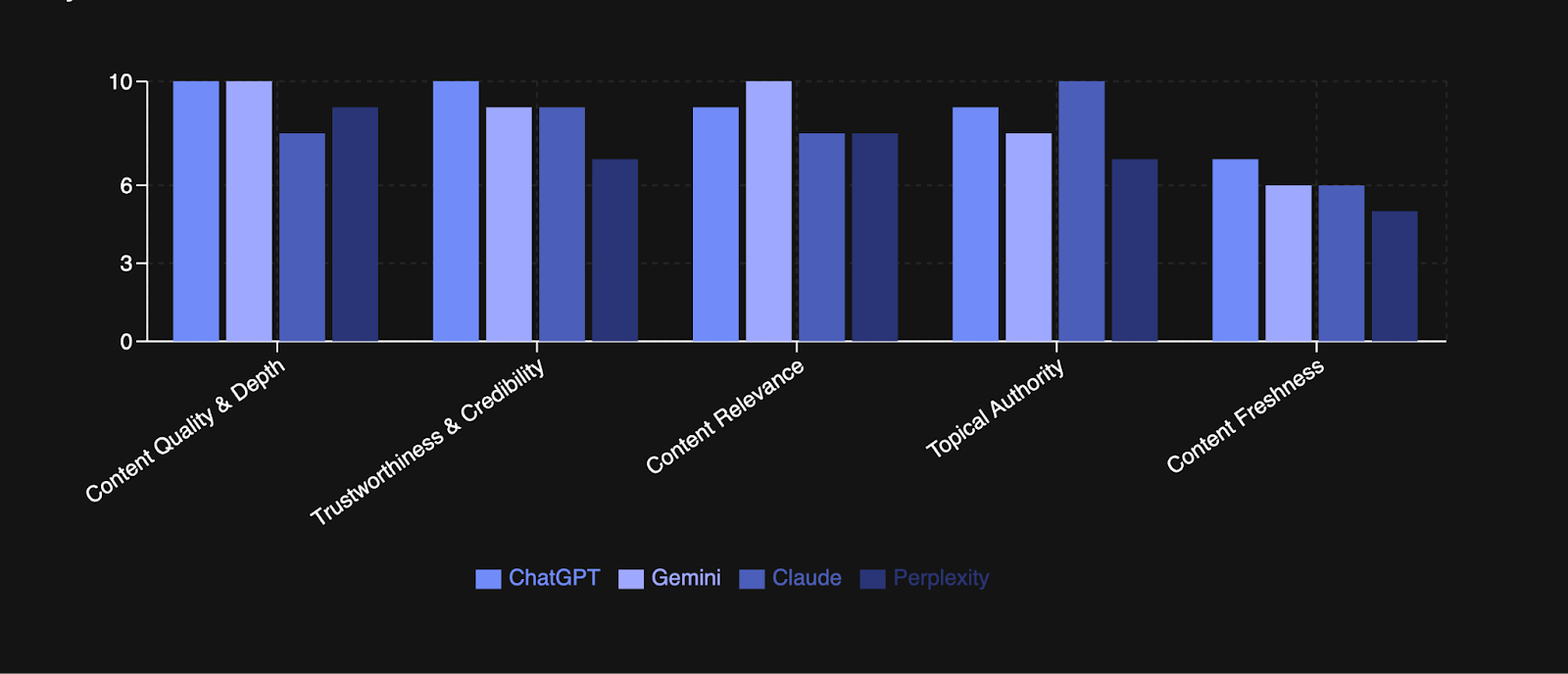

Across all models, content quality and depth emerged as the most critical factor in determining visibility. AI engines prioritize well-structured, comprehensive, and nuanced content over surface-level or keyword-stuffed pages.

🔍 Insight: AI models are optimizing for highly detailed and informative content that provides direct, well-supported answers to user prompts.

AI search engines favor sources that demonstrate authority, with an emphasis on third-party validation.

ChatGPT weighted trustworthiness at a perfect 10, while Claude and Gemini closely followed. Perplexity, on the other hand, scored it 7, suggesting a lower reliance on formal credibility signals.

🔍 Insight: AI models assess the reputation and credibility of sources rather than just indexing popular content.

Relevance is crucial, but it’s more than just keyword matching. AI models analyze semantic alignment with user intent.

🔍 Insight: LLMs excel at delivering personalized, relevant answers to user prompts. Content that directly addresses user intent and aligns with the context of the query consistently outperforms pages optimized for generic keywords.

AI models prioritize sources cited by reputable publishers like Wikipedia, academic journals, and well-regarded news outlets.

🔍 Insight: Given LLMs’ reliance on retrieval-augmented generation (RAG) sources for real-time retrieval, this is one of the most foundational and impactful variables. Brands and publishers looking to increase AI visibility need to secure relevant citations from trusted industry sources that each model cites frequently.

AI models tend to favor subject-matter experts with niche focus. Content from widely recognized industry leaders tends to surface more frequently.

🔍 Insight: Niche expertise matters. Publishing deep insights within a field increases visibility across AI models.

Although AI search is evolving beyond traditional SEO, existing search rankings still play a role.

🔍 Insight: Conventional SEO remains relevant, but AI search engines don’t rely solely on traditional search rankings to determine credibility.

Claude places high importance on measurable results—case studies, statistics, and quantifiable success metrics boost credibility.

🔍 Insight: Content that demonstrates clear, verifiable impact is prioritized over vague or unsupported claims.

AI models analyze public sentiment by assessing reviews, ratings, and user feedback across platforms.

🔍 Insight: Positive reviews and user perception of credibility influence AI search ranking, but not as much as direct citations.

ChatGPT, Gemini, and Claude prioritize frequent and consistent mentions of a source across multiple high-quality references.

🔍 Insight: Consistency reinforces credibility but is not a substitute for high-quality primary sources.

Social engagement, including Google Reviews, Reddit discussions, and Quora responses, provides additional trust signals.

🔍 Insight: User-generated content contributes to AI ranking decisions, but it is secondary to direct credibility indicators.

AI models benefit from structured data, but it does not drive rankings in the same way it does for traditional search engines.
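For readers who want a concrete picture of what "structured data" means here, the sketch below builds a schema.org `Article` description as JSON-LD, one common way to publish structured data on a page. It is an illustration only: the function names (`build_article_jsonld`, `as_script_tag`) and every sample value are hypothetical, and nothing in the study implies that this markup by itself changes how an AI model ranks a page.

```python
import json


def build_article_jsonld(headline, author_name, date_published, date_modified, url):
    """Return a schema.org Article description serialized as a JSON-LD string.

    All arguments are plain strings; dates are expected in ISO 8601 form
    (for example "2025-01-15").
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "dateModified": date_modified,
        "mainEntityOfPage": url,
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld):
    """Wrap a JSON-LD string in the script tag used to embed it in an HTML page."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    markup = build_article_jsonld(
        headline="Example article title",
        author_name="Jane Doe",
        date_published="2025-01-15",
        date_modified="2025-02-01",
        url="https://example.com/blog/example-article",
    )
    print(as_script_tag(markup))
```

The resulting tag would typically be placed in the page's HTML. Keeping `dateModified` accurate also ties in with the point about freshness and accuracy.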

Regular updates improve engagement but are not a major ranking factor. AI models prioritize accuracy over recency, although fresher content is often more accurate.

While fast-loading, mobile-friendly content improves user experience, AI search models rank content on credibility and expertise over speed. Technical performance may not have a direct impact on rankings, but it could impact AI crawlability, which in turn impacts AI models’ knowledge bases.

Localization matters for geo-specific prompts or queries, but global topic rankings rely on the broader impact factors above.

Social media engagement (likes, shares, comments) has minimal direct influence on AI visibility. Its influence is slightly higher for ChatGPT, possibly because Bing (which ChatGPT draws upon) places more emphasis on social signals in its ranking factors.

This study presents the most comprehensive dataset on AEO impact variables to date, confirming that high-quality content remains king. While AEO differs from traditional SEO, there is a clear overlap in visibility factors—content quality, credibility, and domain expertise are the top drivers of AI search rankings.

As AI search adoption increases and LLMs refine their approach, brands must rethink their organic growth, content, and SEO strategies holistically. Adapting to AEO is no longer optional—tracking performance and optimizing for AI search and LLMs is essential given their rapid adoption and inevitable impact on organic visibility.

The era of Answer Engine Optimization is here. Brands that master AI search visibility dynamics will lead the next wave of digital discovery. If you’re looking for an end-to-end platform to boost your brand’s visibility and drive organic growth in AI search, reach out to Goodie—we’re here to help you stay ahead.