Large language model (LLM) tools like ChatGPT, Gemini, Claude, and Perplexity have transformed the search landscape. For brands, how these models reference (or fail to reference) their products and content can have a major impact on visibility, credibility, and customer trust.

This guide walks you through how LLM citations work, why they matter for brand mentions, how to track them, and how to build a strategy that positions your brand as the authoritative source models pull from. Because LLM brand mentions will only grow in importance, now is the time to start creating the content that earns them.

An LLM citation is a reference that a large language model includes alongside its generated response, linking or attributing the answer to a specific source.

For example, when you ask a question in Perplexity or ChatGPT’s browsing mode, the model might show clickable footnotes, “source pins”, or an inline list of references at the end. Each citation indicates where the model retrieved or validated the information it presented.

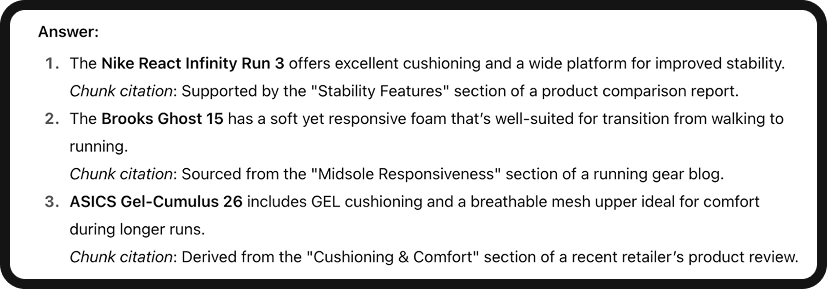

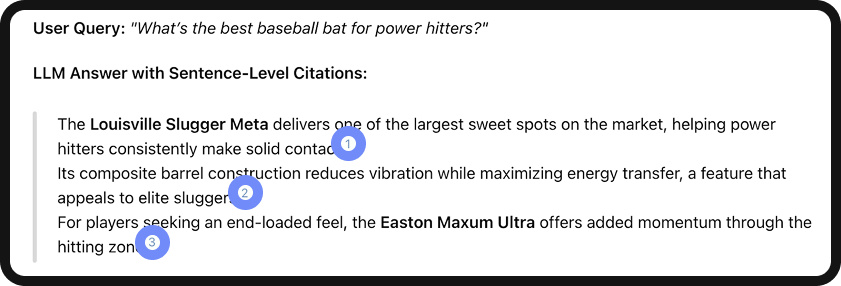

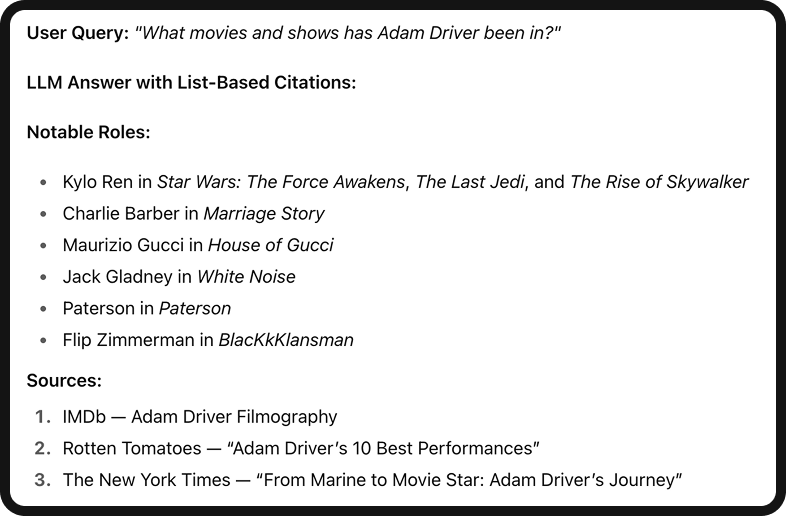

Citations can appear in several forms:

LLM citations aim to make synthesized answers more transparent, help users verify their accuracy, and reduce misinformation by showing where specific claims originated.

Yes, LLMs can cite their sources. That being said, how well they cite depends on the model, the data source, and the context of the prompt.

Different models have different citation mechanisms:

In short: not all LLM outputs are equal. Some are grounded in retrieval-augmented generation (RAG) pipelines with explicit source linking; others rely purely on pretraining data and may not produce any citation at all.
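To make the "retrieval plus source linking" idea concrete, here is a deliberately simplified Python sketch of how a RAG-style pipeline can attach citations to an answer. It is a toy, not how ChatGPT, Perplexity, or any other product actually works: the `Document` class, the keyword-overlap scoring, and the `retrieve` / `answer_with_citations` functions are hypothetical, and the real generation step is replaced by simply stitching the retrieved passages together.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    """A hypothetical indexed page: its URL and its text."""
    url: str
    text: str


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus, k=2):
    """Rank documents by how many query terms they share (toy scoring)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc.text)), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]  # drop irrelevant pages
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return [doc for _, doc in scored[:k]]


def answer_with_citations(query, corpus, k=2):
    """Stand-in for generation: join retrieved passages with numbered sources."""
    docs = retrieve(query, corpus, k)
    if not docs:
        return "No grounded answer found."
    body = " ".join(f"{doc.text} [{i}]" for i, doc in enumerate(docs, start=1))
    refs = "\n".join(f"[{i}] {doc.url}" for i, doc in enumerate(docs, start=1))
    return f"{body}\n\nSources:\n{refs}"


if __name__ == "__main__":
    corpus = [
        Document("https://brand.example/report",
                 "Our running shoes cut carbon emissions by using recycled foam."),
        Document("https://news.example/sneakers",
                 "Eco-friendly shoes are gaining market share."),
        Document("https://recipes.example/bread", "How to bake bread at home."),
    ]
    print(answer_with_citations("eco-friendly running shoes carbon", corpus))
```

Even in this toy, a page can only be cited if the retrieval step surfaces it first, which is why being crawlable and easy to parse comes before anything else.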

Now that we understand what an LLM citation is and how it works, you may be wondering how it affects your brand. The gist: when an LLM cites your brand, you gain more than visibility; you capture trust at the exact moment a decision is being made.

Let’s dive a little deeper into why it matters:

A strong citation presence also helps offset the risk of citation bias, where models repeatedly cite your competitors but not you, even when your content is more accurate.

If you want to optimize for citations, you first need visibility into when, where, and how your brand is being referenced.

Here’s how to track your existing visibility in LLM responses:

Manually test prompts across different LLMs:
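If you save the answers and source links from your manual prompt tests, a small script can tally them consistently. The Python sketch below assumes you record each test by hand in a hypothetical `ModelResponse` structure (nothing here calls a model API). It flags whether the brand is named, whether your domain appears among the cited URLs, and what share of responses cite you versus competitors, which is one way to spot the citation bias described above. The brand name and `.example` domains are placeholders.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class ModelResponse:
    """One saved answer from a manual prompt test (hypothetical structure)."""
    model: str
    prompt: str
    answer: str
    cited_urls: list = field(default_factory=list)


def is_mentioned(brand, text):
    """True if the brand name appears as a whole phrase, ignoring case."""
    return re.search(rf"\b{re.escape(brand)}\b", text, re.IGNORECASE) is not None


def cites_domain(domain, urls):
    """True if any cited URL is on the domain or one of its subdomains."""
    domain = domain.lower()
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False


def audit(responses, brand, domain):
    """Count prompts, brand mentions, and domain citations per model."""
    report = {}
    for r in responses:
        row = report.setdefault(r.model, {"prompts": 0, "mentioned": 0, "cited": 0})
        row["prompts"] += 1
        row["mentioned"] += is_mentioned(brand, r.answer)
        row["cited"] += cites_domain(domain, r.cited_urls)
    return report


def citation_share(responses, domains):
    """Fraction of all responses that cite each domain (you vs. competitors)."""
    total = len(responses) or 1  # avoid division by zero on an empty list
    return {d: sum(cites_domain(d, r.cited_urls) for r in responses) / total
            for d in domains}


if __name__ == "__main__":
    responses = [
        ModelResponse("Perplexity", "best eco-friendly running shoes",
                      "Acme Running publishes a detailed sustainability report.",
                      ["https://www.acmerunning.example/report",
                       "https://rival.example/shoes"]),
        ModelResponse("ChatGPT", "best eco-friendly running shoes",
                      "Popular options include Rival Shoes.",
                      ["https://rival.example/shoes"]),
    ]
    print(audit(responses, "Acme Running", "acmerunning.example"))
    print(citation_share(responses, ["acmerunning.example", "rival.example"]))
```

Keeping the prompt list fixed and rerunning it on a schedule makes the numbers comparable over time.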

Platforms like Goodie track LLM brand mentions and citations across ChatGPT, Gemini, Claude, Perplexity, and others. You can see:


If AI crawlers like ChatGPT-User or ClaudeBot can’t access your content, you’re very unlikely to earn LLM citations. Use Goodie’s AI Agent Analytics toolset to monitor bot visits, crawl errors, and blocked assets.
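If you have access to raw server logs, you can also run a rough check yourself. The Python sketch below is one way to do it, assuming standard combined-log-format access logs: it counts visits and 4xx/5xx responses per AI crawler, and it uses the standard library's `urllib.robotparser` to list which crawlers your robots.txt blocks for a given URL. The `AI_BOTS` tokens are an assumption based on publicly documented crawler names; confirm them against each vendor's documentation, since user agents change and can be spoofed.

```python
import re
from collections import Counter
from urllib.robotparser import RobotFileParser

# Assumed AI crawler user-agent tokens; verify against each vendor's docs.
AI_BOTS = ["GPTBot", "ChatGPT-User", "OAI-SearchBot", "ClaudeBot", "PerplexityBot"]

# Captures the status code and user agent from a combined-log-format line:
# "REQUEST" STATUS BYTES "REFERER" "USER-AGENT"
LOG_PATTERN = re.compile(r'"[^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"')


def summarize_bot_traffic(log_lines):
    """Count visits and error responses (status >= 400) per AI crawler."""
    visits, errors = Counter(), Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match:
            continue  # skip lines that are not in combined log format
        status, user_agent = int(match.group(1)), match.group(2).lower()
        for bot in AI_BOTS:
            if bot.lower() in user_agent:
                visits[bot] += 1
                if status >= 400:
                    errors[bot] += 1
    return visits, errors


def blocked_bots(robots_txt, url):
    """Return the AI crawlers that this robots.txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in AI_BOTS if not parser.can_fetch(bot, url)]


if __name__ == "__main__":
    # Made-up sample lines in combined log format.
    sample_log = [
        '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /blog/post HTTP/1.1" '
        '200 5120 "-" "Mozilla/5.0; compatible; GPTBot/1.1"',
        '198.51.100.7 - - [10/Jan/2025:10:05:00 +0000] "GET /report.pdf HTTP/1.1" '
        '404 0 "-" "Mozilla/5.0; compatible; ClaudeBot/1.0"',
    ]
    robots = "User-agent: PerplexityBot\nDisallow: /\n\nUser-agent: *\nDisallow: /private/\n"
    visits, errors = summarize_bot_traffic(sample_log)
    print("visits:", dict(visits))
    print("errors:", dict(errors))
    print("blocked:", blocked_bots(robots, "https://example.com/blog/post"))
```

A crawler that shows up in `blocked` or racks up errors on your key pages is a good first thing to fix.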

It’s not enough to know you’re cited; you also need to know why, and what the citation is saying:
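A quick first pass at the "what is it saying" question is to pull out every sentence in a saved answer that names your brand, so you can review the framing by hand. This Python sketch uses a naive regex sentence splitter and a hypothetical brand name; it will miss sentences that refer to the brand only by pronoun ("its", "the company"), so treat the output as a starting point for human review rather than a complete audit.

```python
import re


def mention_contexts(brand, answer):
    """Return each sentence in an LLM answer that names the brand."""
    # Naive split on ., !, or ? followed by whitespace. It is good enough for
    # review, but it will mis-split abbreviations such as "e.g." or "Inc.".
    sentences = re.split(r"(?<=[.!?])\s+", answer.strip())
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    return [s for s in sentences if pattern.search(s)]


if __name__ == "__main__":
    answer = ("Acme Running is known for recycled materials. Its report cites "
              "lower emissions. Rival Shoes is cheaper, but Acme Running lasts longer.")
    for sentence in mention_contexts("Acme Running", answer):
        print("-", sentence)
```

Reading these sentences side by side across models makes outdated or inaccurate claims about your brand easier to spot.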

Once you know where you stand, you can actively work to improve your citation presence. Here’s a step-by-step strategy:

LLMs pull from content that is easy to parse and attribute. To improve retrievability:

Many AI search tools appear to weigh domain authority when selecting citations, so backlinks from trusted sites can boost your credibility with the retrieval systems that choose sources.

Sometimes LLMs cite you for the wrong reason (or don’t cite you when they should). Combat this by:

Let’s say your brand sells eco-friendly running shoes. You publish a 2025 Sustainability Report with statistics on carbon footprint reduction.

In Month One:

By Month Three:

Citations are the currency of trust in AI search. As LLMs become the default way people discover and verify information, your ability to earn and keep those citations will define your brand’s visibility and credibility.

With the right mix of technical accessibility, content formatting, and ongoing tracking, you can position your brand to be not just mentioned in LLM outputs but consistently cited as the go-to authority in your space.